Oracle Data Pump (Export - Part 2)

- Shashi Kallae

- Jan 30, 2024

- 5 min read

As per Oracle, the Data Pump supports four methods of data movement, each with different performance and functional characteristics. These methods are described as follows:

Data File Copying (Transportable Tablespaces): This is the fastest method of moving data from one database to another, as it involves copying the data files to the target database without interpretation or alteration of the data. This method is often used for moving entire tablespaces or databases.

To perform the Data File copying, the following steps can be performed (Disclaimer: these steps may change as per your requirements and prerequisites, but always consult Oracle support for the best possible methods. The below commands or examples are just for educational purposes only):

Set Tablespaces to Read-Only.

ALTER TABLESPACE tablespace_name READ ONLY;

This SQL statement sets the tablespace to the read-only mode in preparation for the transportable tablespace operation.2. Export the Tablespace Metadata.

expdp system/password DIRECTORY=dpump_dir1 TRANSPORT_TABLESPACES=tablespace_name DUMPFILE=tablespace_name.dmp3. Copy the Data Files.

Copy the data files using rsync or sftp via ssh or sftp gui tool or scp.4. Import the Tablespace Metadata. This command imports the tablespace metadata into the target database and generates a SQL file that contains the necessary SQL statements to perform the import.

impdp system/password DIRECTORY=dpump_dir1 DUMPFILE=tablespace_name.dmp SQLFILE=tablespace_name.sqlDirect Path Load and Unload: After data file copying, direct path is the next fastest method of moving data. In this method, the SQL layer of the database is bypassed, and rows are moved to and from the dump file with minimal interpretation of the data.

External Tables: When data file copying is not selected, and the data cannot be moved using a direct path, the mechanism of the external table is used. This method allows data to be read from external files and loaded into the database.

To achieve the data file copy using external tables, the following is done (Disclaimer: these steps may change as per your requirements and prerequisites, but always consult Oracle support for the best possible methods. The below commands or examples are just for educational purposes only):

Create an External Table

CREATE TABLE my_external_table

(

column1 NUMBER,

column2 VARCHAR2(50),

column3 DATE

)

ORGANIZATION EXTERNAL

(

TYPE ORACLE_DATAPUMP

DEFAULT DIRECTORY data_pump_directory

LOCATION ('datafile1.dat', 'datafile2.dat')

)

REJECT LIMIT UNLIMITED;OR

CREATE TABLE my_external_table

ORGANIZATION EXTERNAL

(TYPE ORACLE_DATAPUMP

DEFAULT DIRECTORY data_pump_directory

LOCATION ('datafile1.dat', 'datafile2.dat'))

AS

SELECT

column1_name column1_datatype,

column2_name column2_datatype,

...

FROM my_source_table;Here,

1. “my_external_table” should be any desired table name you can think of.

2. "column1 NUMBER" (Specify the columns and their data types).

3. The "ORGANIZATION EXTERNAL" clause mentions that this is an external table.

4. TYPE “ORACLE_DATAPUMP" indicates the external table type.

5. "DEFAULT DIRECTORY" points to the Oracle directory where the external files (example: /home/oracle/) are located.

6. “LOCATION" specifies the external file names ('datafile1.dat', 'datafile2.dat') or patterns.

7. "REJECT LIMIT UNLIMITED" allows for unlimited rejected records.

8. “my_source_table” is the source table from which you unload the data.2. Query the external table.

select count(*) from my_external_table;

select * from my_external_table;Conventional Path: This is the slowest method of moving data. It involves interpreting and altering the data during the movement process.

These methods provide flexibility in choosing the most suitable approach for different data movement requirements, considering factors such as speed, resource utilization, and the nature of the data being moved or copied over.

Some Best Practices For Oracle Data Pump

Best Practices for Oracle Data Pump

DATAPUMP_EXP_FULL_DATABASE and DATAPUMP_IMP_FULL_DATABASE roles are required for several export and import operations.

Do not invoke export SYS as SYSDBA, because SYSDBA is used internally and has special privileges. Under the guidance of Oracle technical support, one should use SYSDBA to export and also to Import.

Use a parameter file. Make a consistent Data Pump Export. Exclude Statistics from Export and Import. Include Diagnostic parameters during Export and Import.

Use the Data Pump Parallel process parameter to create additional background processes and sessions during an Export and Import.

Specify wildcards to create multiple dump files.

Exclude Statistics from Export and Import. Oracle recommends not exporting statistics during export to improve export and import performance, even accounting for the need to gather statistics after the import.

Set resource utilization appropriately by restricting resource usage for a Data Pump.

Use a Network Link for servers on different operating systems and storage. Use secure LOBs.

The database compatibility affects the Data Pump export and Import operations, so set the database compatibility using the “VERSION” parameter.

Compression of data and metadata during the exports can reduce the size of the dump files. Also, it can help the size of the data stream during a Network Mode Import.

Recommended compression parameters are “COMPRESSION=ALL and COMPRESSION_ALGORITHM=MEDIUM."

Compression Algorithms Supported by Oracle Data Pump

Compression Parameter is used with expdp to compress the dump file that is generated. The Oracle Data Pump supports several compression algorithms for data movements such as,

BASIC ALGORITHM: Basic algorithm provides good compression without impacting the performance of the Data Pump process and the system process such as CPU, RAM etc. Advanced compression license required for this operation.

LOW ALGORITHM: This algorithm is used when CPU utilization and related resources are considered more important than the compression ratio. Here “compression_algorithm=low” parameter is used where the server is CPU bound. Advanced compression license required for this operation.

MEDIUM ALGORITHM: This algorithm offers similar functionalities to that of BASIC algorithm, balancing compression ratio to that of CPU. Advanced compression license required for this operation.

HIGH ALGORITHM: This compression Offers maximum compression but with higher CPU utilization. Advanced compression license required for this operation.

Additionally, the compression parameter can be used to set only metadata, or only data, or both during an expdp (export) operation.

COMPRESSION_ALGORITHM parameter

COMPRESSION_ALGORITHM Valid keyword values: BASIC, LOW, MEDIUM and HIGH.

compression_algorithm=basic: Offers good compression and is not too CPU intensive.

compression_algorithm=low: Offers compression without impacting the CPU.

compression_algorithm=medium: Similar to "basic” compression, balancing compression ratio to that of CPU.

compression_algorithm=high: The most CPU-intensive option, but may result in the smallest export dump file.

A Sample Parameter File!

$ cat exampledata_compress.par

dumpfile=exampledata_compressedfile.dmp

logfile=exampledata.log

directory=EXPDIR

tables=DBACLASS.OBJECT_LIST

compression=ALL

COMPRESSION_ALGORITHM=LOWA Sample Parameter File!

$ cat exampledata_compress.par

dumpfile=exampledata_compressedfile.dmp

logfile=exampledata.log

directory=EXPDIR

tables=DBACLASS.OBJECT_LIST

compression=ALL

COMPRESSION_ALGORITHM=MEDIUMA Sample Parameter File!

$ cat exampledata_compress.par

dumpfile=exampledata_compressedfile.dmp

logfile=exampledata.log

directory=EXPDIR

tables=DBACLASS.OBJECT_LIST

compression=ALL

COMPRESSION_ALGORITHM=HIGHexpdp parfile=exampledata_compress.parCOMPRESSION Parameter

The below examples demonstrate how compression parameter can be used during Oracle data pump export. Advanced compression license is required for these operations when compression is used.

COMPRESSION=ALL

This option compresses both metadata and data. It provides good compression without impacting performance.

expdp UID/PASSWD DIRECTORY=EXAMPLEDUMP_DIR DUMPFILE=HR_ALL.dmp COMPRESSION=ALLCOMPRESSION=DATA_ONLY

expdp UID/PASSWD DIRECTORY=EXAMPLEDUMP_DIR DUMPFILE=HR_DATAONLY.dmp COMPRESSION=DATA_ONLYCOMPRESSION=METADATA_ONLY

expdp UID/PASSWD DIRECTORY=EXAMPLEDUMP_DIR DUMPFILE=HR_METADATAONLY.dmp COMPRESSION=METADATA_ONLYCOMPRESSION=NONE

expdp UID/PASSWD DIRECTORY=EXAMPLEDUMP_DIR DUMPFILE=HR_NOCOMPRESSION_USED.dmp COMPRESSION=NONESummary of Oracle Data Pump Components

Oracle Data Pump consists of three components: expdp, impdp, command-line clients.

The expdp and impdp clients use the procedures provided in the DBMS_DATAPUMP PL/SQL package to execute the export and import commands.

expdp is used to start the Oracle Data Pump export utility, while, impdp starts the Oracle Data Pump Import utility.

The DBMS_METADATA package provides a centralized facility for extracting, manipulating, and re-creating dictionary metadata.

Oracle recommends that you instead use directory objects in place of symbolic links.

If Tables have disabled Unique Indexes, then the Data pump doesn't load the Tables.

The indexes should be dropped before loading the data into the Tables and then recreated.

Data Pump API: The DBMS_DATAPUMP PL/SQL package is also known as the Data Pump API.

Metadata API: the DBMS_METADATA PL/SQL package is also known as the Metadata API.

Pro-Tip

Oracle recommends that you instead use directory objects in place of symbolic links. Otherwise, you may encounter errors such as “ORA-29283: invalid file operation: path traverses a symlink."

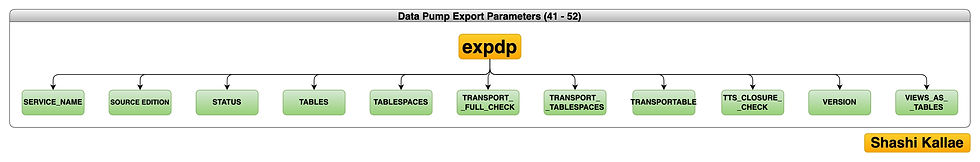

Oracle Data Pump Command-Line Parameters

Conclusion

Oracle Data Pump is a versatile and powerful tool for managing data and metadata in Oracle Databases. The Oracle Data Pump Export feature from the tool is used to export the data, but when running the Oracle Data Pump in a production environment, consider the potential impact on the performance of the databases. It can impact the database activities like reads, updates, or inserts. Oracle recommends executing export in prod environments outside business hours without impacting dependent ETL jobs and users. Check stats before and after the export. Ensure the checksum validity on the data or dump files after the export.

Comments